Feature scaling, sometimes loosely called data normalization, is a preprocessing technique used in machine learning and data analysis to standardize or transform the range of the features (variables) in a dataset. It adjusts the scale or distribution of each feature so that all features sit on a similar scale and have comparable ranges.

Feature scaling is needed when the features in a dataset use different units of measurement or have very different magnitudes. This mismatch can hurt algorithms that are sensitive to the scale of their inputs, such as distance-based methods (k-nearest neighbours, k-means, support vector machines) and models trained with gradient descent.

For example, a dataset might contain an age feature and a height feature (in feet). Age can range from 1 to 100 or more, while height never comes close to 15. In such cases, a scale-sensitive model can end up weighting age far more heavily than height simply because its values are larger. This can lead to poor predictions.
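To make the effect concrete, consider a distance-based model such as k-nearest neighbours, which compares rows by Euclidean distance. The sketch below uses a small hypothetical dataset, with values made up for illustration. It measures how much of the squared distance between two rows comes from the age column, first on the raw values and then after min-max scaling each column to the range 0 to 1. The helper `age_share_of_distance` is a hypothetical name introduced only for this example.

```python
import numpy as np

# Hypothetical dataset: each row is (age in years, height in feet).
data = np.array([
    [1.0, 2.0],
    [25.0, 5.0],
    [35.0, 6.5],
    [100.0, 6.0],
])

def age_share_of_distance(a, b):
    """Fraction of the squared Euclidean distance contributed by the age column."""
    diff_sq = (a - b) ** 2
    return float(diff_sq[0] / diff_sq.sum())

# Compare rows 1 and 2: 10 years apart, 1.5 feet apart.
raw_share = age_share_of_distance(data[1], data[2])

# Min-max scale each column to [0, 1], then compare the same two rows.
col_min = data.min(axis=0)
col_max = data.max(axis=0)
scaled = (data - col_min) / (col_max - col_min)
scaled_share = age_share_of_distance(scaled[1], scaled[2])

print(f"age share of distance, raw:    {raw_share:.2f}")     # 0.98
print(f"age share of distance, scaled: {scaled_share:.2f}")  # 0.08
```

On the raw values, age accounts for roughly 98% of the squared distance even though the two people differ noticeably in height. After scaling, age accounts for only about 8%, so height now has a real say in which rows count as close.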

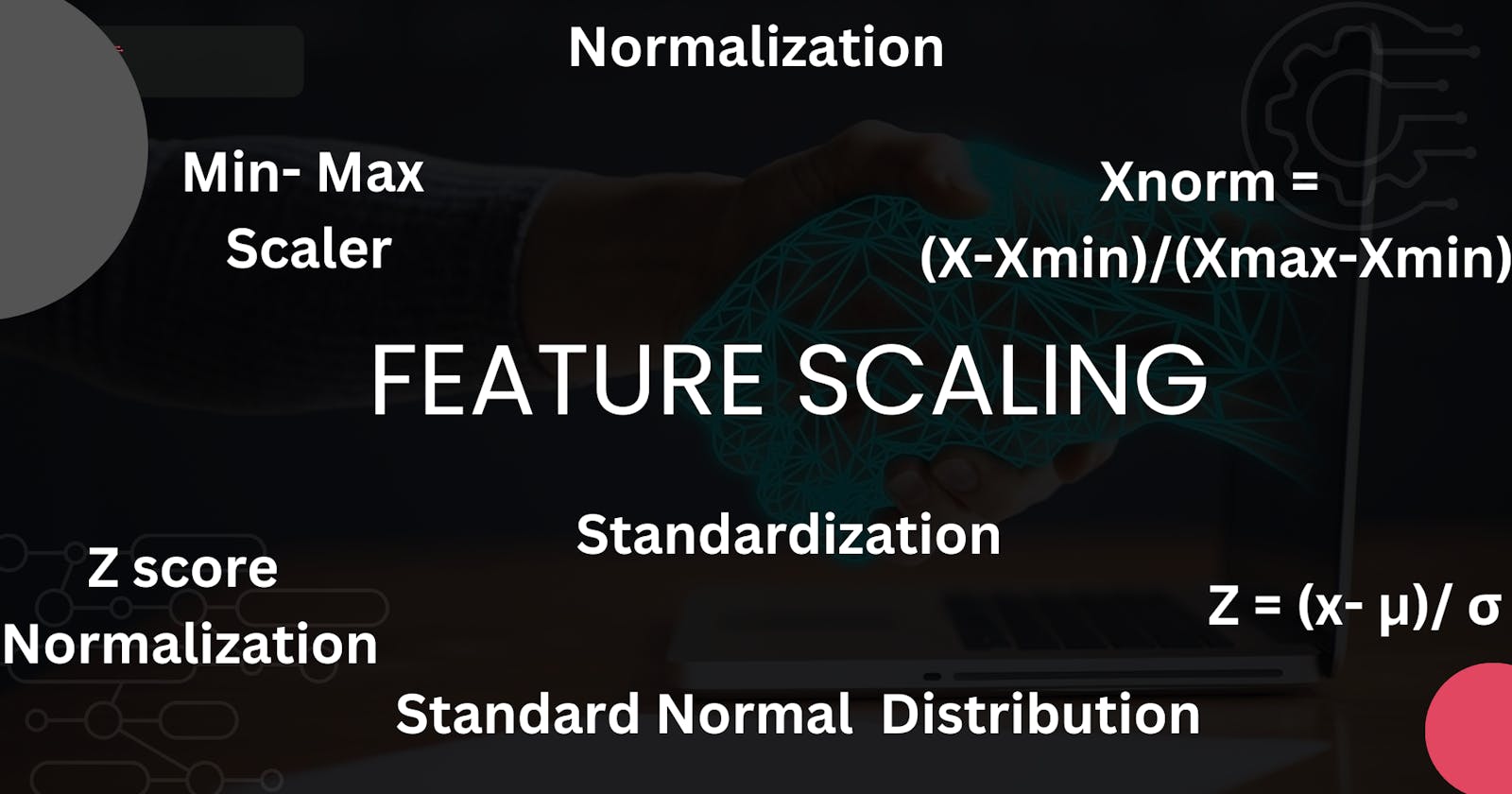

Two commonly used techniques for feature scaling are:

Normalization

Standardization

Normalization

This method rescales each feature so that its values fall within a specified range. The most common normalization technique for this is the min-max scaler.

Min-Max Scaler

The min-max scaler maps a feature's values to the range 0 to 1 using the following formula:

$$X_{norm} = \frac{X - X_{min}}{X_{max} - X_{min}}$$

Where,

$X$ is the original value of a feature,

$X_{min}$ is the minimum value of that feature in the dataset,

$X_{max}$ is the maximum value of that feature in the dataset,

$X_{norm}$ is the transformed value, which lies in the range 0 to 1.
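As a worked example of the formula, take the sample values [2, 5, 10, 15, 20], the same array used in this section's code example:

$$\begin{aligned}
X_{min} &= 2, \qquad X_{max} = 20, \qquad X_{max} - X_{min} = 18 \\
X_{norm}(10) &= \frac{10 - 2}{18} = \frac{8}{18} \approx 0.444 \\
[2, 5, 10, 15, 20] &\mapsto \left[0, \tfrac{3}{18}, \tfrac{8}{18}, \tfrac{13}{18}, 1\right] \approx [0, 0.167, 0.444, 0.722, 1]
\end{aligned}$$

The smallest value always maps to 0 and the largest to 1. Every other value keeps its relative position between them.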

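Python code to implement it:

```python
import numpy as np

def min_max_scaling(feature):
    """Rescale a 1-D array of values to the range [0, 1]."""
    feature = np.asarray(feature, dtype=float)
    min_value = np.min(feature)
    max_value = np.max(feature)
    value_range = max_value - min_value
    # A constant feature has zero range; return zeros instead of dividing by zero.
    if value_range == 0:
        return np.zeros_like(feature)
    scaled_feature = (feature - min_value) / value_range
    return scaled_feature

# Example usage
original_feature = np.array([2, 5, 10, 15, 20])
scaled_feature = min_max_scaling(original_feature)
print(scaled_feature)
```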

In this code snippet, we define a function min_max_scaling that takes a feature as input and returns the scaled feature. It calculates the minimum value and maximum value of the feature using the np.min and np.max functions from the NumPy library. Then, it applies the min-max scaling formula to each value in the feature using element-wise operations in NumPy.

In the example usage, we create an array original_feature with some arbitrary values. We then call the min_max_scaling function on original_feature and store the scaled feature in the scaled_feature variable. Finally, we print the scaled feature to observe the transformed values.
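In practice, the minimum and maximum are usually computed on the training data only and then reused for validation or test data. This keeps information from those sets out of the preprocessing step. The sketch below shows that fit-then-transform pattern column by column. It also generalizes the target range to an arbitrary interval [low, high] via $X' = low + \frac{X - X_{min}}{X_{max} - X_{min}}(high - low)$. The class name `MinMaxScalerSketch` and its methods are hypothetical. They are loosely modelled on the fit/transform style of scikit-learn's `MinMaxScaler` (which also takes a `feature_range` parameter), but this is not that library's implementation.

```python
import numpy as np


class MinMaxScalerSketch:
    """Hypothetical helper: learns per-column minima and ranges from training
    data, then maps any data with those same statistics onto [low, high]."""

    def __init__(self, feature_range=(0.0, 1.0)):
        self.low, self.high = feature_range

    def fit(self, X):
        X = np.asarray(X, dtype=float)
        self.min_ = X.min(axis=0)
        data_range = X.max(axis=0) - self.min_
        # Constant columns have zero range; use 1 to avoid dividing by zero.
        self.range_ = np.where(data_range == 0, 1.0, data_range)
        return self

    def transform(self, X):
        X = np.asarray(X, dtype=float)
        unit = (X - self.min_) / self.range_  # [0, 1] on the training data
        return self.low + unit * (self.high - self.low)


# Example usage: two hypothetical features, scaled column by column.
X_train = np.array([[2.0, 100.0], [5.0, 300.0], [20.0, 500.0]])
X_test = np.array([[10.0, 400.0], [25.0, 50.0]])

scaler = MinMaxScalerSketch().fit(X_train)
print(scaler.transform(X_train))
# New values outside the training range land outside [0, 1].
print(scaler.transform(X_test))
```

Reusing the training statistics means a test value larger than anything seen in training (25 in the first column here) maps above 1. That is expected behaviour, not an error.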

Standardization

Standardization rescales a feature so that it has a mean of 0 and a standard deviation of 1, the same mean and spread as the standard normal distribution. It does not change the shape of the distribution: a skewed feature stays skewed. The most common technique for this is z-score normalization.
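Matching the mean and standard deviation of a standard normal distribution is not the same as becoming normally distributed. Standardization is only a shift followed by a positive rescaling, so it leaves the shape of the distribution unchanged. A minimal check, using a made-up right-skewed feature and a hypothetical `skewness` helper (population skewness, computed as the mean of the cubed z-scores):

```python
import numpy as np

def skewness(x):
    """Population skewness: mean of cubed z-scores (hypothetical helper)."""
    x = np.asarray(x, dtype=float)
    z = (x - x.mean()) / x.std()
    return float(np.mean(z ** 3))

# Made-up right-skewed feature: many small values and one large one.
feature = np.array([1.0, 1.0, 2.0, 2.0, 3.0, 4.0, 8.0, 20.0])
standardized = (feature - feature.mean()) / feature.std()

# Mean ~0 and standard deviation ~1 after standardization...
print(round(standardized.mean(), 6), round(standardized.std(), 6))
# ...but the skewness is the same before and after (up to float rounding).
print(round(skewness(feature), 4), round(skewness(standardized), 4))
```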

Z-Score Normalization

$$z = \frac{x - \mu}{\sigma}$$

where

$x$ is the original value of a feature,

$\mu$ is the mean value of that feature in the dataset,

$\sigma$ is the standard deviation of that feature in the dataset,

$z$ is the transformed value; across the dataset, the transformed feature has a mean of zero and a standard deviation of one.
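As a worked check of the formula, take the sample values [2, 5, 10, 15, 20] used in this section's code example. The population standard deviation is used here (dividing by n = 5), which matches NumPy's default for np.std:

$$\begin{aligned}
\mu &= \frac{2 + 5 + 10 + 15 + 20}{5} = 10.4 \\
\sigma &= \sqrt{\frac{(-8.4)^2 + (-5.4)^2 + (-0.4)^2 + 4.6^2 + 9.6^2}{5}} = \sqrt{\frac{213.2}{5}} = \sqrt{42.64} \approx 6.530 \\
z(20) &= \frac{20 - 10.4}{6.530} \approx 1.470, \qquad z(2) = \frac{2 - 10.4}{6.530} \approx -1.286
\end{aligned}$$

Unlike min-max scaling, the results are not confined to [0, 1]. Values below the mean become negative, and each z-score measures how many standard deviations a value lies from the mean.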

Practical implementation in Python:

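```python
import numpy as np

def z_score_normalization(feature):
    """Standardize a 1-D array of values to mean 0 and standard deviation 1."""
    feature = np.asarray(feature, dtype=float)
    mean = np.mean(feature)
    std_dev = np.std(feature)  # population standard deviation (ddof=0)
    # A constant feature has zero spread; return zeros instead of dividing by zero.
    if std_dev == 0:
        return np.zeros_like(feature)
    scaled_feature = (feature - mean) / std_dev
    return scaled_feature

# Example usage
original_feature = np.array([2, 5, 10, 15, 20])
scaled_feature = z_score_normalization(original_feature)
print(scaled_feature)
```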

In this code snippet, we define a function z_score_normalization that takes a feature as input and returns the scaled feature. It calculates the mean and standard deviation of the feature using the np.mean and np.std functions from the NumPy library. By default, np.std computes the population standard deviation (ddof=0); pass ddof=1 for the sample standard deviation. Then, it applies the z-score normalization formula to each value in the feature using element-wise operations in NumPy.

In the example usage, we create an array original_feature with some arbitrary values. We then call the z_score_normalization function on original_feature and store the scaled feature in the scaled_feature variable. Finally, we print the scaled feature to observe the transformed values.
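As with min-max scaling, the mean and standard deviation are usually computed on the training data and then reused for new data. A minimal sketch, assuming a 2-D array with one feature per column. The helper names `fit_standardizer` and `apply_standardizer` are hypothetical, and like the function above they use np.std's default population standard deviation (ddof=0).

```python
import numpy as np

def fit_standardizer(X_train):
    """Hypothetical helper: per-column mean and population std of training data."""
    X_train = np.asarray(X_train, dtype=float)
    mean = X_train.mean(axis=0)
    std = X_train.std(axis=0)
    # Constant columns have std 0; use 1 so they map to 0 instead of NaN.
    std = np.where(std == 0, 1.0, std)
    return mean, std

def apply_standardizer(X, mean, std):
    """Hypothetical helper: standardize X with previously fitted statistics."""
    return (np.asarray(X, dtype=float) - mean) / std

# Example usage: two hypothetical features on very different scales.
X_train = np.array([[2.0, 150.0], [5.0, 160.0], [10.0, 170.0],
                    [15.0, 180.0], [20.0, 190.0]])
X_test = np.array([[12.0, 175.0]])

mean, std = fit_standardizer(X_train)
Z_train = apply_standardizer(X_train, mean, std)
print(Z_train.mean(axis=0).round(6))  # each column's mean is ~0
print(Z_train.std(axis=0).round(6))   # each column's std is ~1
print(apply_standardizer(X_test, mean, std))
```

Both columns end up on the same footing even though the second one is measured in much larger numbers.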

Whether you choose standardization or normalization, both techniques have a place in data preprocessing. The right choice depends on your data, the context of your analysis, and the requirements of your model. Applied appropriately, they help scale-sensitive models produce more accurate and robust results.